[ETA (January 2022): My co-authors James Bell, Linda Linsefors and Joar Skalse and I give a much more detailed analysis of the dynamics discussed in this post in our paper titled “Reinforcement Learning in Newcomblike Environments”, published at NeurIPS 2021.]

The law of effect (LoE), as introduced on p. 244 of Thorndike’s (1911) Animal Intelligence, states:

> Of several responses made to the same situation, those which are accompanied or closely followed by satisfaction to the animal will, other things being equal, be more firmly connected with the situation, so that, when it recurs, they will be more likely to recur; those which are accompanied or closely followed by discomfort to the animal will, other things being equal, have their connections with that situation weakened, so that, when it recurs, they will be less likely to occur. The greater the satisfaction or discomfort, the greater the strengthening or weakening of the bond.

As I (and others) have pointed out elsewhere, an agent applying LoE would come to “one-box” (i.e., behave like evidential decision theory (EDT)) in Newcomb-like problems in which the payoff is eventually observed. For example, if you face Newcomb’s problem itself multiple times, then one-boxing will be associated with winning a million dollars and two-boxing with winning only a thousand dollars. (As noted in the linked note, this assumes that the different instances of Newcomb’s problem are independent: for instance, one-boxing in the first instance does not influence the prediction in the second. It also assumes that a CDT agent cannot precommit to one-boxing, e.g. because precommitment is impossible in general or because the predictions were made long ago and thus can no longer be causally influenced.)

A caveat to this result is that with randomization one can derive behavior more like causal decision theory (CDT) from alternative versions of LoE. Imagine an agent that chooses probability distributions over actions, such as the distribution P with P(one-box)=0.8 and P(two-box)=0.2. The agent’s physical action is then sampled from that probability distribution. Furthermore, assume that the predictor in Newcomb’s problem can only predict the probability distribution and not the sampled action, and that he fills box B with a probability equal to the probability the agent assigns to one-boxing. If this agent plays many instances of Newcomb’s problem, then she will ceteris paribus fare better in rounds in which she two-boxes: for a given distribution, box B is equally likely to be full whichever action is sampled, and two-boxing adds the thousand dollars on top. By LoE, she may therefore update toward two-boxing being the better option and consequently two-box with higher probability. Throughout the rest of this post, I will expound on the “goofiness” of this application of LoE.
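The "ceteris paribus" claim can be checked with a small calculation. The following is only a minimal sketch under the setup described above: box B is assumed to be filled with probability P(one-box), independently of which action is then sampled. The function and constant names are my own and purely illustrative.

```python
MILLION = 1_000_000   # contents of box B when it is filled
THOUSAND = 1_000      # contents of the transparent box


def expected_payoff_given_action(p_one_box: float, action: str) -> float:
    """Expected payoff conditional on the sampled action, for a fixed distribution.

    Assumption: the predictor fills box B with probability p_one_box and
    cannot see which action will be sampled from the distribution.
    """
    expected_box_b = p_one_box * MILLION
    if action == "one-box":
        return expected_box_b
    if action == "two-box":
        return expected_box_b + THOUSAND
    raise ValueError(f"unknown action: {action!r}")


# Holding the distribution fixed, compare the two sampled actions.
for p in (0.2, 0.5, 0.8):
    one = expected_payoff_given_action(p, "one-box")
    two = expected_payoff_given_action(p, "two-box")
    print(f"P(one-box)={p}: one-box {one:,.0f}, two-box {two:,.0f}")
```

For any fixed distribution, the two-boxing rounds come out ahead by the thousand dollars, which is the association that this version of LoE picks up on.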

Notice that this is not the only possible way to apply LoE. Indeed, the more natural way seems to be to apply LoE only to whatever entity the agent has the power to choose rather than to something that is merely influenced by that choice. In this case, that entity is the probability distribution and not the action sampled from it. Applied at the level of the probability distribution, LoE again leads to EDT. For example, in Newcomb’s problem the agent receives more money in rounds in which she chooses a higher probability of one-boxing. Let’s call this version of LoE “standard LoE”. We will call other versions, in which the choice is updated so that some other variable (in this case the physical action) takes values associated with high payoffs, “non-standard LoE”.
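To see why LoE applied at the level of the distribution favors one-boxing, one can compute the expected payoff of each distribution as a whole. Again, this is just a sketch assuming that box B is filled with probability P(one-box) and that the action is sampled independently of the box contents; the names are hypothetical.

```python
MILLION = 1_000_000   # contents of box B when it is filled
THOUSAND = 1_000      # contents of the transparent box


def expected_payoff_of_distribution(p_one_box: float) -> float:
    """Expected payoff of choosing the distribution with P(one-box) = p_one_box.

    Assumption: box B is filled with probability p_one_box, and the extra
    thousand dollars is collected only when two-boxing is sampled.
    """
    return p_one_box * MILLION + (1 - p_one_box) * THOUSAND


# Compare a few candidate distributions.
for p in (0.0, 0.2, 0.5, 0.8, 1.0):
    print(f"P(one-box)={p}: expected payoff {expected_payoff_of_distribution(p):,.0f}")
```

The expected payoff increases with the probability of one-boxing, so an agent that reinforces distributions (rather than sampled actions) according to their observed payoffs is pushed toward P(one-box)=1.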

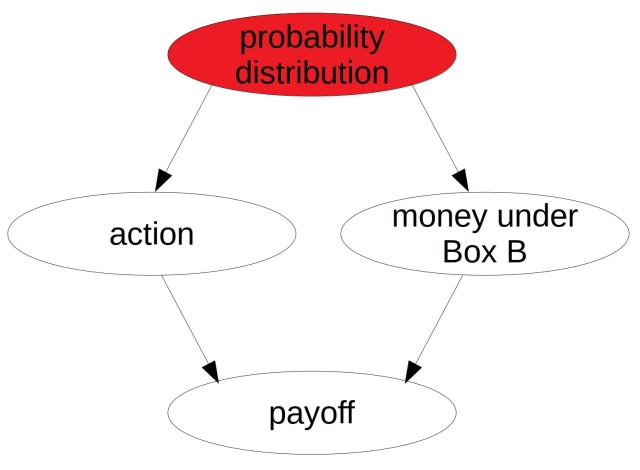

Although non-standard LoE yields CDT-ish behavior in Newcomb’s problem, it can easily be criticized on causalist grounds. Consider a non-Newcomblike variant of Newcomb’s problem in which there is no predictor but merely an entity that reads the agent’s mind and fills box B with a million dollars in causal dependence on the probability distribution chosen by the agent. The causal graph representing this decision problem is shown in the accompanying figure, with the subject of choice marked red. Unless they are equipped with an incomplete model of the world (one that doesn’t include the probability distribution step), CDT and EDT agree that in this variant of Newcomb’s problem one should choose the probability distribution over actions that one-boxes with probability 1. After all, choosing that probability distribution causes the game master to see that you will probably one-box and thus also causes him to put money in box B. But if you play this alternative version of Newcomb’s problem and use LoE at the level of one- versus two-boxing, then you will converge on two-boxing because, again, you fare better in rounds in which you happen to two-box.

Be it in Newcomb’s original problem or in this variant of Newcomb’s problem, non-standard LoE can lead to learning processes that don’t seem to match LoE’s “spirit”. When you apply standard LoE (and probably also in most cases of applying non-standard LoE), you develop a tendency to exhibit rewarded choices, and this will lead to more reward in the future. But if you adjust your choices with some intermediate variable in mind, you may get worse and worse. For instance, in either the regular or non-Newcomblike Newcomb’s problem, non-standard LoE adjusts the choice (the probability distribution over actions) so that the (physically implemented) action is more likely to be the one associated with higher reward (two-boxing), but the choice itself (high probability of two-boxing) will be one that is associated with low rewards. Thus, learning according to non-standard LoE can lead to decreasing rewards (in both Newcomblike and non-Newcomblike problems).
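The decreasing-reward dynamic can be illustrated with an idealized, noise-free sketch. It assumes that the agent's payoff estimates equal the true expectations at her current distribution (real sample-based learning is much noisier), that box B is filled with probability P(one-box), and that the distribution is adjusted in fixed steps. The update rules, step size and names below are hypothetical simplifications rather than a definitive model of either version of LoE.

```python
MILLION = 1_000_000   # contents of box B when it is filled
THOUSAND = 1_000      # contents of the transparent box
STEP = 0.1            # hypothetical step size for adjusting P(one-box)


def expected_reward(p: float) -> float:
    """Expected payoff of choosing P(one-box) = p (box B filled w.p. p)."""
    return p * MILLION + (1 - p) * THOUSAND


def clamp(p: float) -> float:
    """Keep a probability inside [0, 1]."""
    return min(1.0, max(0.0, p))


def nonstandard_step(p: float) -> float:
    """Shift probability toward the action with the higher conditional payoff."""
    payoff_one_box = p * MILLION             # expected payoff given one-boxing
    payoff_two_box = p * MILLION + THOUSAND  # expected payoff given two-boxing
    direction = 1 if payoff_one_box > payoff_two_box else -1
    return clamp(p + direction * STEP)


def standard_step(p: float) -> float:
    """Move to whichever nearby distribution has the highest expected payoff."""
    candidates = [clamp(p - STEP), p, clamp(p + STEP)]
    return max(candidates, key=expected_reward)


def run(step_fn, p: float = 0.5, rounds: int = 10) -> list:
    """Return the expected reward before and after each update."""
    history = [expected_reward(p)]
    for _ in range(rounds):
        p = step_fn(p)
        history.append(expected_reward(p))
    return history


nonstandard_rewards = run(nonstandard_step)
standard_rewards = run(standard_step)
print("non-standard LoE:", [round(r) for r in nonstandard_rewards])
print("standard LoE:    ", [round(r) for r in standard_rewards])
```

Under these assumptions, the non-standard learner drives P(one-box) down to 0 and her expected reward falls to a thousand dollars, whereas the standard learner drives P(one-box) up to 1 and her expected reward rises to a million dollars.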

All in all, what I call non-standard LoE looks a bit like a hack rather than some systematic, sound version of CDT learning.

As a side note, the sensitivity to the details of how LoE is set up relative to randomization shows that the decision theory (CDT versus EDT versus something else) implied by some agent design can sometimes be very fragile. I originally thought that there would generally be some correspondence between agent designs and decision theories, such that changing the decision theory implemented by an agent usually requires large-scale changes to the agent’s architecture. But switching from standard LoE to non-standard LoE is an example where what seems like a relatively small change can significantly change the resulting behavior in Newcomb-like problems. Randomization in decision markets is another such example. (And the Gödel machine is yet another example, albeit one that seems less relevant in practice.)

Acknowledgements

I thank Lukas Gloor, Tobias Baumann and Max Daniel for advance comments. This work was funded by the Foundational Research Institute (now the Center on Long-Term Risk).